Create RAG Agents with the Power of n8n

Let’s be honest: working with AI can feel like walking through a beautifully designed but occasionally bewildering park. Large Language Models (LLMs) are powerful and can generate impressive text, code, and ideas. However, they often struggle with accuracy when asked about very specific, up-to-date, or proprietary information.

This is where the concept of “hallucination” comes in – when an AI confidently presents incorrect or fabricated information. For anyone in data control, this is a major red flag. We need reliable, verifiable answers, especially when dealing with internal data, policies, or critical business operations.

Fortunately, there’s a brilliant solution called Retrieval Augmented Generation, or RAG for short. And the best part? You can easily create RAG agents without diving deep into complex code, thanks to intuitive low-code platforms like n8n. This guide will show you how to leverage n8n to build powerful, context-aware AI workflows that truly understand your data.

Understanding Retrieval Augmented Generation (RAG)

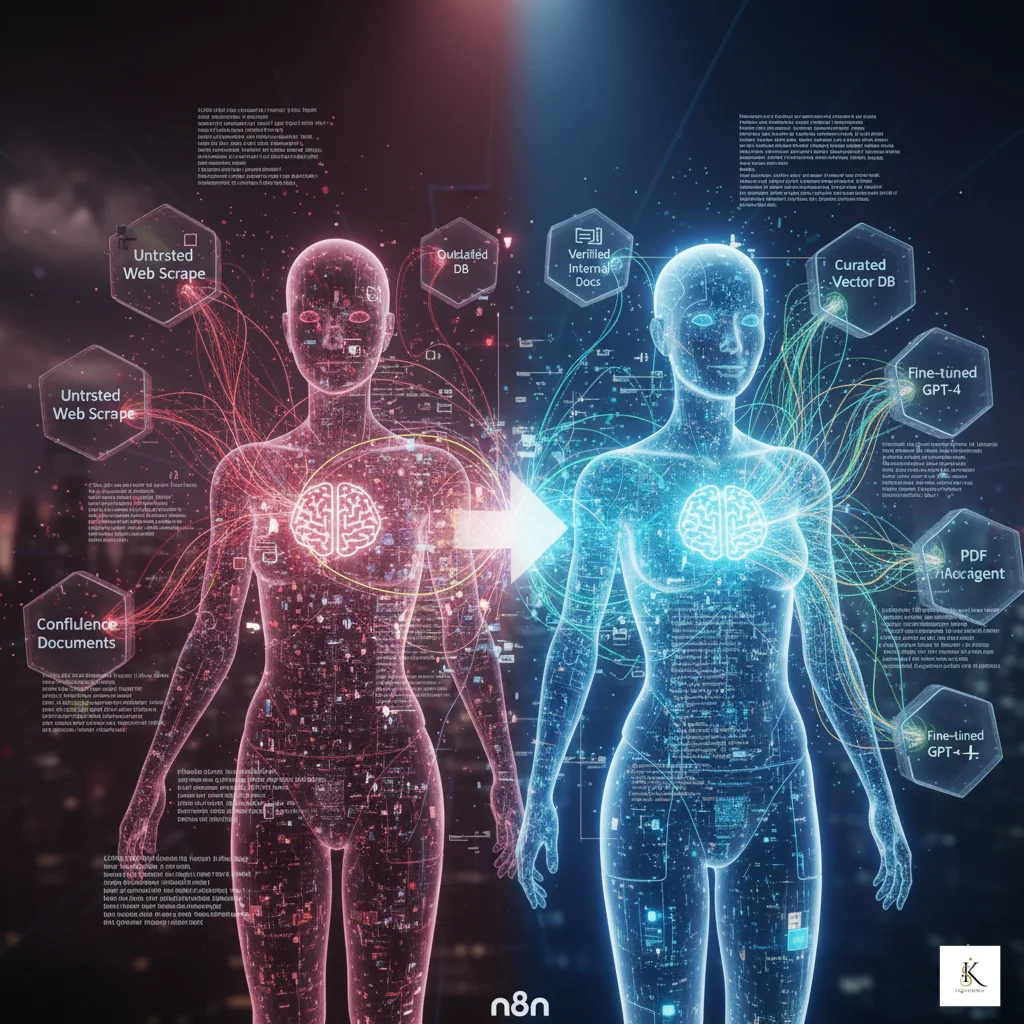

So, what exactly are RAG agents? Think of it this way: when you ask a standard LLM a question, it relies solely on the knowledge it gained during its training. This knowledge might be vast, but it’s also static and can quickly become outdated or lack specific context relevant to your organization.

RAG steps in to fix this. Before the LLM generates a response, a RAG system first retrieves relevant pieces of information from a specified external knowledge base. This could be your company’s internal documents, a private database, or specific articles you’ve curated. Once the relevant context is found, it’s then fed to the LLM along with your original question.

The LLM then uses this newly provided context to formulate a more accurate, informed, and up-to-date answer. Grounding the model in external data this way substantially reduces hallucinations, although it does not eliminate them, and it lets you trace each answer back to your own trusted sources.
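To make the retrieve, augment, generate sequence concrete, here is a minimal Python sketch. It is not how n8n implements anything: the knowledge base, the word-overlap ranking, and the `echo_llm` stand-in are all assumptions chosen so the flow can run without any external service.

```python
from typing import Callable

# Hypothetical knowledge base; in practice this would be your own documents.
KNOWLEDGE_BASE = [
    "Remote employees may expense up to 50 USD per month for internet.",
    "Annual leave requests must be submitted 14 days in advance.",
    "Laptops are refreshed every 36 months.",
]


def retrieve(question: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Toy retrieval: rank documents by how many question words they share."""
    question_words = set(question.lower().split())
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & set(doc.lower().split())),
        reverse=True,
    )
    return ranked[:top_k]


def answer(question: str, llm: Callable[[str], str]) -> str:
    """Retrieve context, add it to the prompt, then let the LLM generate."""
    context = "\n".join(retrieve(question, KNOWLEDGE_BASE))
    prompt = (
        f"Using the following information:\n{context}\n\n"
        f"Answer this question: {question}"
    )
    return llm(prompt)


def echo_llm(prompt: str) -> str:
    """Stand-in for a real model call: returns the prompt so the flow is visible."""
    return prompt


result = answer("How often are laptops refreshed?", echo_llm)
```

Swapping `echo_llm` for a real model call is the only change needed to turn this into a working (if simplistic) RAG loop; the retrieval step is what a real system would replace with proper search.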

For data control professionals, this is a game-changer. It means you can deploy AI applications whose answers are drawn from your validated internal resources, which makes them easier to verify and to keep in line with your data governance standards.

Why n8n is Your Go-To for Building RAG Workflows

You might be thinking, “This sounds great, but is it complex to set up?” Not with n8n! n8n is a source-available, low-code automation platform (its maintainers describe the license as “fair-code”) that you can self-host, and it makes connecting different services and building sophisticated workflows surprisingly simple. It’s an excellent choice for building RAG agents for several key reasons:

- Low-Code Accessibility: You don’t need to be a seasoned programmer to create powerful automations. n8n’s visual workflow builder lets you drag and drop nodes, making complex logic easy to design and understand.

- Extensive Integrations: n8n offers hundreds of built-in integrations for databases (PostgreSQL, MySQL, MongoDB), cloud storage (Google Drive, S3), and APIs (REST, GraphQL), and it connects directly to LLM providers such as OpenAI and Hugging Face or to custom AI services. This means your data sources and AI models can talk to each other seamlessly.

- Flexible Data Handling: From querying databases to parsing documents and manipulating text, n8n provides a rich set of nodes to prepare your data for retrieval and augmentation. This is crucial for effective n8n RAG integration.

- Workflow Automation: Once set up, your RAG workflow can run automatically, triggered by events, on a schedule, or via a webhook. This allows for continuous improvement and real-time response generation.

A Step-by-Step Guide: Building Your First RAG Agent with n8n

Ready to get practical? Let’s walk through the fundamental steps to build your own RAG agent using n8n. This process will show you how to automate retrieval augmented generation effectively.

Step 1: Define Your Data Source (Retrieval)

First, identify where your foundational knowledge lives. Is it a database of policies, a folder of PDF documents on Google Drive, or an internal knowledge base accessible via an API? n8n can connect to almost anything.

You’ll start by adding a node that can access this data. For a database, use a ‘PostgreSQL’ or ‘MySQL’ node. For documents in cloud storage, use a ‘Google Drive’ or ‘S3’ node. If it’s an internal API, the ‘HTTP Request’ node is your best friend. This node will be responsible for fetching the raw information.
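Whatever the source, it helps to bring the fetched records into one common shape before retrieval. The sketch below shows one way to do that in Python, for example inside a ‘Code’ node. The `Document` class, the column names (`id`, `body`), and the API payload layout (`articles`, `slug`, `content`) are hypothetical; substitute whatever your own sources return.

```python
from dataclasses import dataclass


@dataclass
class Document:
    source: str  # where the text came from, e.g. "postgres" or "api"
    doc_id: str
    text: str


def from_database_rows(rows: list[dict]) -> list[Document]:
    """Map database rows (hypothetical columns: id, body) to Documents."""
    return [Document("postgres", str(row["id"]), row["body"].strip()) for row in rows]


def from_api_response(payload: dict) -> list[Document]:
    """Map a hypothetical JSON payload {"articles": [{"slug", "content"}]} to Documents."""
    return [
        Document("api", item["slug"], item["content"].strip())
        for item in payload.get("articles", [])
    ]


# Sample inputs standing in for the output of a database node and an HTTP Request node.
rows = [{"id": 1, "body": " Data retention period is 7 years. "}]
payload = {
    "articles": [{"slug": "access-policy", "content": "Access requires manager approval."}]
}

documents = from_database_rows(rows) + from_api_response(payload)
```

Keeping the source and an identifier on every record makes it possible to show users where an answer came from later on.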

Step 2: Set Up Your Retrieval Logic

Once you’ve connected to your data source, you need to retrieve the *most relevant* pieces. Simply dumping all your data into the LLM won’t work – it’s inefficient and can overwhelm the model.

This step often involves querying your data based on the user’s input. For example, if your data is in a database, you might use a ‘Code’ node or a ‘PostgreSQL’ query node to search for keywords related to the user’s question. For document-based systems, you might need to use an external service for vector embeddings and similarity search, which n8n can also integrate with via HTTP requests.
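For intuition about what similarity search does, here is a small Python sketch. It uses plain word counts as a stand-in for embeddings, which is an assumption made purely to keep the example self-contained; a real setup would obtain vectors from an embedding model and would usually let a vector database do the ranking.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. A real setup would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(value * value for value in a.values()))
    norm_b = math.sqrt(sum(value * value for value in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(question: str, documents: list[str], top_k: int = 2) -> list[tuple[float, str]]:
    """Return the top_k (score, document) pairs, most similar first."""
    query_vector = embed(question)
    scored = [(cosine_similarity(query_vector, embed(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


documents = [
    "Passwords must be rotated every 90 days.",
    "Customer data is retained for seven years.",
    "Visitors must sign in at reception.",
]
results = search("How long is customer data retained?", documents, top_k=1)
```

Word counts only match exact terms, so a question phrased with synonyms would score poorly here. That limitation is the main reason document-heavy setups move to learned embeddings.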

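The goal here is to get a focused, small chunk of highly relevant text that the LLM can easily digest. If you’re dealing with many results, use the ‘Split In Batches’ node (called ‘Loop Over Items’ in newer n8n releases) or the ‘Item Lists’ node (whose operations newer releases split into separate nodes such as ‘Limit’ and ‘Sort’) to manage your data efficiently.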
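One simple way to keep the context small is to cap it with a size budget. The Python sketch below assumes the chunks already arrive sorted from most to least relevant, and it counts characters rather than tokens for simplicity; both the function name and the 200-character budget are illustrative.

```python
def select_context(ranked_chunks: list[str], max_chars: int = 200) -> list[str]:
    """Keep the highest-ranked chunks that fit within a character budget."""
    selected: list[str] = []
    used = 0
    for chunk in ranked_chunks:  # assumed sorted, most relevant first
        if used + len(chunk) > max_chars:
            break  # stop at the first chunk that would exceed the budget
        selected.append(chunk)
        used += len(chunk)
    return selected


# Three placeholder chunks of 120, 60, and 50 characters.
ranked_chunks = ["A" * 120, "B" * 60, "C" * 50]
context = select_context(ranked_chunks, max_chars=200)
```

Stopping at the first chunk that does not fit keeps the selection strictly in relevance order. Skipping it and trying smaller chunks further down would pack the budget more tightly, at the cost of including less relevant text.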

Step 3: Integrate Your Large Language Model (Generation)

Now that you have your context, it’s time to bring in the LLM. Add an ‘OpenAI’ node, or configure an ‘HTTP Request’ node to connect to another LLM provider like Azure OpenAI, Anthropic, or a local model.
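If you go the ‘HTTP Request’ route, it helps to know what the request needs to contain. The Python sketch below assembles the URL, headers, and JSON body for OpenAI’s Chat Completions endpoint without sending anything. The API key, model name, and system instruction are placeholders, and other providers use different URLs and body formats, so check your provider’s documentation.

```python
import json


def build_chat_request(api_key: str, model: str, prompt: str) -> dict:
    """Assemble (but do not send) a Chat Completions request."""
    return {
        "url": "https://api.openai.com/v1/chat/completions",
        "headers": {
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        "body": json.dumps(
            {
                "model": model,  # placeholder; use a model your account can access
                "messages": [
                    {"role": "system", "content": "Answer only from the provided context."},
                    {"role": "user", "content": prompt},
                ],
                "temperature": 0,  # favour consistent answers over creative ones
            }
        ),
    }


request = build_chat_request(
    "YOUR_API_KEY", "your-model-name", "What is the retention period?"
)
```

In n8n itself, the API key belongs in a stored credential rather than in the workflow, so treat the header here as an illustration of the format only.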

The trick here is to craft a prompt that incorporates both the user’s original question AND the context you just retrieved. Your prompt might look something like: “Using the following information: [retrieved context], please answer this question: [user’s question].” This ensures the LLM is guided by your specific data.

Make sure to map the output of your retrieval step directly into the LLM prompt within the n8n workflow. This is where the “augmentation” happens, as the LLM’s generation is enhanced by your external data.
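As a rough illustration of that mapping, here is a Python sketch of a prompt builder. The function name, the numbered-chunk layout, and the fallback wording are all assumptions; the point is simply that retrieved text and the user’s question end up in one string, with an instruction not to guess when the context falls short.

```python
def build_prompt(question: str, context_chunks: list[str]) -> str:
    """Combine retrieved context and the user's question into one prompt."""
    if context_chunks:
        # Number the chunks so the model (and a human reviewer) can refer to them.
        context = "\n".join(
            f"[{index}] {chunk}" for index, chunk in enumerate(context_chunks, start=1)
        )
    else:
        context = "(no relevant context found)"
    return (
        "Using the following information:\n"
        f"{context}\n\n"
        "Please answer this question. If the information above does not contain "
        "the answer, say so instead of guessing.\n"
        f"Question: {question}"
    )


prompt = build_prompt(
    "How long is customer data retained?",
    ["Customer data is retained for seven years."],
)
```

Handling the empty case explicitly matters: if retrieval finds nothing, the model should be told so rather than left to answer from its training data alone.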

Step 4: Assemble and Refine the Workflow in n8n

Visually, your n8n workflow might look like this:

- Start Node: Receives the user’s question (e.g., via a webhook or manual trigger).

- Data Source Node: Queries your database or fetches documents based on the question.

- Data Processing Node(s): Filters, cleans, and selects the most relevant information.

- LLM Node: Receives the cleaned data and the original question, then generates a response.

- Response Node: Sends the LLM’s answer back to the user or to another system (e.g., email, Slack, internal dashboard).

Test your workflow thoroughly with different questions and data scenarios. You’ll likely need to iterate on your retrieval logic and prompt engineering to get the best results. n8n’s execution view makes this process straightforward: after each run you can inspect the input and output of every node.
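A small set of question and expected-source pairs makes this kind of testing repeatable. The Python sketch below checks whether a toy retrieval function picks the right document for each test question. The documents, test cases, and word-overlap retrieval are invented for illustration; in practice you would point the same checks at your real retrieval step.

```python
def retrieve(question: str, documents: dict[str, str]) -> str:
    """Toy retrieval: return the id of the document sharing the most words."""
    question_words = set(question.lower().replace("?", "").split())
    return max(
        documents,
        key=lambda doc_id: len(question_words & set(documents[doc_id].lower().split())),
    )


# Hypothetical documents keyed by id.
documents = {
    "leave-policy": "annual leave requests need 14 days notice",
    "expense-policy": "travel expenses are reimbursed within 30 days",
}

# Each test case pairs a question with the document that should be retrieved.
test_cases = [
    ("How much notice do leave requests need?", "leave-policy"),
    ("When are travel expenses reimbursed?", "expense-policy"),
]

# Collect (question, expected, actual) for every case where retrieval missed.
failures = [
    (question, expected, retrieve(question, documents))
    for question, expected in test_cases
    if retrieve(question, documents) != expected
]
hit_rate = 1 - len(failures) / len(test_cases)
```

Rerunning a list like this after each change to the retrieval logic shows quickly whether a tweak helped one question while breaking another.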

Practical Applications for Data Control Professionals

For those in data control, the ability to create RAG agents opens up a world of possibilities:

- Internal Knowledge Base Q&A: Build an AI assistant that provides accurate answers to employee questions about company policies, HR guidelines, or technical documentation, all sourced from your approved internal documents.

- Automated Compliance Checks: Develop agents that can review data inputs or reports against specific regulatory documents or internal compliance frameworks, flagging discrepancies automatically.

- Data Validation & Reporting: Create smart reporting tools that can pull specific data from your systems and generate summaries or insights, augmented by descriptive text based on your own definitions and standards.

- Enhanced Data Governance Queries: Empower users to ask natural language questions about your data assets, and receive answers grounded in your data catalog and governance policies.

By using n8n to build RAG agents, you’re not just deploying AI; you’re deploying AI whose answers are drawn from your own data and can be checked against it. This means more efficient operations, better decision-making, and a lower risk of misinformation.

Wrapping Up: Embrace Smart, Context-Aware AI

Creating effective RAG agents is no longer just for expert developers. With n8n, anyone with a solid understanding of data, especially those in data control roles, can build sophisticated, context-aware AI applications. You gain the power of advanced language models while keeping their answers tied to accurate, current information.

By connecting your specific data sources to powerful LLMs through n8n’s intuitive interface, you keep your AI initiatives grounded in relevant, verifiable information. This is how you truly harness AI: by making it a reliable, informed partner in your operations.

Why not give it a try? Start building your first RAG workflow in n8n today and experience the difference of truly intelligent, context-rich AI responses. Have you experimented with RAG agents or n8n before? Share your thoughts and experiences in the comments below!